Does it make sense to integrate AI into your game's content production pipeline?

How to integrate AI into the content production pipeline – and does it even make sense to do so?

Seems like AI is everywhere now, no escaping it. So, we at Pixonic (MY.GAMES) decided to experiment a little and find out whether it makes sense to integrate it into the content production pipeline of our game War Robots. It’s a multiplayer shooter that follows the LiveOps model: it’s constantly updated with new content, such as robots, pilots, events, and modes. Accordingly, a well-organized content pipeline plays an important role in a game like this.

In this article, we’ll tell you how the workflow on the War Robots project was originally structured, how we tried to incorporate AI, and what results our efforts produced.

This text was created by Dmitry Ulitin (Senior Game Designer at War Robots) and Ekaterina Kononova (former Game Designer at War Robots).

Disclaimer: Art generated by neural networks for War Robots development is used only for references while searching for ideas. None of these artworks make it into the final game.

War Robots pilots

The pilots in War Robots are a very important part of the game. In general terms, each pilot is a game entity that the user sees as a 2D character. The pilot can learn skills that strengthen the player's robot, and each of them has their own biography and place in the War Robots lore. In many ways, we develop the world of our game and tell stories through the pilots themselves. And, of course, pilots don’t pop out of thin air; painstaking work is carried out on each of them.

Here's how everything is usually arranged from the game designer’s side (hereafter referred to as the GD):

GD comes up with a pilot ability

GD orders a pilot portrait

GD writes a pilot bio

Now, coming up with pilot abilities is a separate process; the GD needs to take into account the game’s meta and a bunch of other details, so we can’t hand it over to AI. In this article, we’ll stick with points 2 and 3: the pilot portraits and bios.

Pilot portraits

The flow without AI

Our normal process for creating each pilot’s profile begins with the character’s history and background. Usually, the most important thing is to have a rough idea of the character’s age and some distinctive features that would make them stand out: for example, clothing features or accessories that indicate they belong to a particular faction.

If, at this stage, the GD doesn’t have a 100% complete vision of the hero, it’s not that big of a deal. Later, the art department gets involved in the process, and an artist may also adjust the character and their appearance if there are no critical objections.

So, before we tried to integrate AI into our work, our GDs searched for references in the standard sources, like Pinterest, Google, and ArtStation. As an example, let’s look at how we came up with the image of one of the pilots in our game, Misaki Ueno. Here’s the description of the heroine:

Appearance: A beautiful girl similar to Tifa from Final Fantasy VII. She has a cyborg dog. Dressed in Evolife armor, a WR-style obi belt is tied over the armor. A round fan with the symbols of event 8.0 is stuck into the belt.

Personality: Appearance is sweet and friendly, but she’s very attached to the dog; it seems she has a special connection with it.

Face and hairstyle: Anime-style beauty (again, like Tifa from FF). Aquiline nose. Brown hair tied in a ponytail or bun. Slight bangs.

Clothing: Evolife Armor. An obi belt is tied over the armor; the belt is wide, with a bow at the back or front, and long ties. The armor material is chitin.

Dog: Large cyborg dog. He looks like a Shiba Inu or a wolf from Princess Mononoke. Part of the muzzle has been completely replaced by cyborg parts, and he has a cyborg eye, but there are still some signs of “dog-ness” left in him. Alternatively, the dog can be fully cyborg, but something in his look should hint at his intelligence.

Pose and facial expression: A cyborg wolf dog sits in front of the pilot. One of the pilot's hands lies on the dog's paw, the other on his head. The pose is relaxed, playful. She winks and holds up a “peace” sign.

After this description is done, the artist begins to create the image of the character. First, a sketch is created over several iterations, during which we usually look for the most suitable pose. After that, we test color combinations. Next, once the image has a basis, a 3D model of the heroine is created, and we set the pose and the lighting. All this is rendered, and at last, the artist adds the fine details.

And here’s the final result:

Our flow with AI

Recently, GDs and artists have increasingly begun to use AI when searching for references. Let’s discuss how it’s used in our case, and where AI comes into play in our process.

Ordering art proceeds according to the usual process as mentioned earlier: a GD comes up with a character, and if necessary, an artist gets involved.

But after we tried to integrate AI into the pilot development pipeline, the search for references began to look like this:

We look for initial references on Pinterest, Google and ArtStation

If something is missing, we generate references using AI and/or modify those found on the Internet

For example, let’s take the character of Monique Lenormand and see how we looked for references the traditional way vs. with AI help.

Pilot features:

A confident, slightly hot-tempered young woman, no older than 30-35

A mix of a poor punk bandit and a red-haired fortune teller who always protects her friends

Trope: Action Girl + Fiery Redhead

Strong focus on esotericism and jewelry

Personality: Eccentric, emotional, straightforward, a little aggressive.

Faction: Formerly Icarus, now on her own.

Appearance: Red-haired; you could even call her a red-haired fortune teller. Not old, but not very young either. She has all sorts of trinkets and amulets on her. For clothing, you can take the poncho from the Icarus faction as a basis and tear it up a bit to make it look more punk and reminiscent of wings. You can also use Tarot cards in some unusual way (in the character’s hands or on her clothing).

Here are references we collected in the traditional way, from our game and open sources:

In the references, we used Misty from Cyberpunk 2077 as an image of a modern, popular fortune teller.

Now, here are the references we got from AI:

As you can see, they didn’t turn out to be the highest quality, but you can still grasp the main idea. Why is that? There are several reasons:

The chosen model isn’t optimal for the job; out of the box, the original Stable Diffusion (SD) 1.5 doesn’t generate very high-quality results

The prompt used to generate the image wasn’t optimized; it mixed too many styles of artists that don’t combine well, and many of the words carried little meaning

A negative prompt wasn’t used (this is when you tell the AI not only what you want to see in the final result, but also what you DO NOT want); this can greatly affect the quality of the result, especially in terms of anatomy

Additional options for adjusting the request (ControlNet, embeddings, LoRA, and so on) weren’t used

As a result, we adjusted our requests and received a new reference from AI:

It’s not perfect, of course, but it’s much closer to what we had in mind. Here’s why:

We used a more suitable model and a better prompt

We added a negative prompt

We used negative embeddings: EasyNegative, BadHands

We used image2image and ControlNet preprocessor t2i_style_clipvision with t2iAdapter_style model to combine multiple references
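Most of the prompt fixes above come down to discipline when assembling a request. As a minimal, hypothetical sketch (not our actual tooling), the helper below builds a positive and a negative prompt, drops duplicate details, and caps the number of artist styles. The `GenerationRequest` structure, the `MAX_ARTIST_STYLES` limit, the “style of ...” phrasing, and the placeholder artist names are assumptions for illustration. It also assumes a front end such as the AUTOMATIC1111 WebUI, where a textual-inversion embedding like EasyNegative is activated by writing its name in the negative prompt; check how your own tool handles embeddings.

```python
from dataclasses import dataclass

# Hypothetical limit: mixing many artist styles tended to produce muddled results.
MAX_ARTIST_STYLES = 2


@dataclass
class GenerationRequest:
    """Illustrative container; not the schema of any specific tool."""
    prompt: str
    negative_prompt: str


def _unique(items: list[str]) -> list[str]:
    """Strip whitespace, drop empty entries and duplicates, keep the original order."""
    return list(dict.fromkeys(s.strip() for s in items if s.strip()))


def build_request(subject: str, details: list[str], artist_styles: list[str],
                  negative_terms: list[str], negative_embeddings: list[str]) -> GenerationRequest:
    # Positive prompt: subject first, then unique details, then a few compatible styles.
    styles = _unique(artist_styles)[:MAX_ARTIST_STYLES]
    prompt_parts = [subject.strip(), *_unique(details), *(f"style of {s}" for s in styles)]
    # Negative prompt: embedding trigger words plus plain terms for what we do NOT want.
    negative_parts = _unique([*negative_embeddings, *negative_terms])
    return GenerationRequest(", ".join(prompt_parts), ", ".join(negative_parts))


req = build_request(
    subject="red-haired fortune teller, torn punk poncho",
    details=["tarot cards", "amulets", "tarot cards"],
    artist_styles=["Artist A", "Artist B", "Artist C"],  # placeholder names
    negative_terms=["extra fingers", "blurry"],
    negative_embeddings=["EasyNegative", "BadHands"],
)
print(req.prompt)
print(req.negative_prompt)
```

Keeping the negative prompt as its own list makes it easy to reuse the same anatomy-related exclusions across different characters.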

And here’s our final character: Monique Lenormand. The artists drew her entirely by hand, using both images from open sources and AI images as references.

How we use visual AI to create pilots now

We continue to partially use AI to generate references when ordering art, but not all GDs use it. For example, AI is useful when it’s difficult to find the desired reference via Google, or if you need to combine several specific things and see an approximate final result, as in the example above: what a sci-fi fortune teller might look like.

Also, an AI-created reference can help shape the general image of a character at an early stage; this is especially true for fictional settings like the one in War Robots.

So in this case, AI doesn’t replace an already established pipeline, but rather complements it (and it’s optional, really). Generating AI references isn’t necessary, but looking for references in traditional sources is a must.

Pilot biographies

The flow without AI

Before, the process of writing a biography from the GD’s point of view looked like this:

Based on the theme of the event during which the pilot will be released, the GD comes up with the main idea for the pilot. The GD also determines which in-game faction the character belongs to and their top-level archetype (in other words, whether they’re a positive or negative character)

GD looks for inspiration in films, TV series, literature, TV tropes, and even historical events

GD writes the text of the biography, which usually goes through several iterations

Since War Robots isn’t a narrative-driven game, there’s no dedicated narrative designer on the project; every GD is a generalist and should be able to do a little bit of everything. But beginners usually spend a lot of time working on texts because they’re not used to it.

We are still trying to optimize this process; here are some upcoming plans:

Standardize the biography workflow, clearly describing all the stages and subtleties

Create a guide for GDs with sources to draw ideas from; this will save time

Set pilot character types at the release planning stage

The flow with AI

Pilots are additional content through which players learn the plot of our world. Each individual story fits into the overall story, and every pilot who has their own unique story is considered “legendary.” This isn’t just a nice word to describe them, but rather a feature: through their small plot, the character must make it clear to the player that they have qualities and abilities (including in-game abilities) that make them legendary.

For each story we have specific goals; GDs may deviate from these goals to implement some of their creative ideas, but usually the pilot has certain criteria:

The pilot must belong to a faction within the game

The pilot must tie in with the event during which they appear

Their biography should contain hints about the piloted robot or the ability that this pilot has

By the end of their in-game biography, the character must be relatively free to join the player’s hangar.
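One way to make the GD’s implicit context visible is to write it down as a structured brief before asking a model for anything. This doesn’t remove the editing cost described below, but it shows exactly what the model is missing. The sketch is hypothetical: the `PilotBrief` fields mirror the four criteria above plus the GD-written thesis, and the prompt wording is an assumption, not a template we use in production. It refuses to build a prompt while any field is empty.

```python
from dataclasses import dataclass, fields


@dataclass
class PilotBrief:
    """Hypothetical brief; each field maps to one criterion a pilot story must meet."""
    name: str
    faction: str       # the in-game faction the pilot belongs to
    event: str         # the event the pilot is released with
    ability_hint: str  # the robot or ability the biography should hint at
    ending: str        # how the pilot ends up free to join the player's hangar
    thesis: str        # GD-written foundation: origin, rise to legend, outcome

    def missing_fields(self) -> list[str]:
        """Return the names of fields that are still blank."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]

    def to_prompt(self) -> str:
        """Assemble a text prompt; fail loudly instead of letting the model guess."""
        missing = self.missing_fields()
        if missing:
            raise ValueError("brief is incomplete: " + ", ".join(missing))
        return "\n".join([
            f"Write a short biography for the pilot {self.name}.",
            f"Faction: {self.faction}.",
            f"The story must tie in with this event: {self.event}.",
            f"Hint at this robot or ability: {self.ability_hint}.",
            f"Ending: {self.ending}.",
            f"Expand this outline without adding unrelated plot points: {self.thesis}",
        ])
```

The empty-field check is the point of the design: the thesis in particular is something the GD has to supply, because the model doesn’t know the War Robots world.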

And there’s the rub: all these goals and inputs are in the GD’s head, but the AI doesn’t have this context. This creates several challenges when generating stories with AI.

The GD still needs to know the basic story of the game and the character

Every character biography begins with a thesis, a brief summary of how and where this character appeared, how they became legendary, and what happened to them at the end of the story. This is the foundation, which is then enriched with further details. The problem is that AI cannot build such a foundation, because it needs to know the history of the War Robots world – but entering the entire context into AI is quite problematic. Therefore, at this stage, a GD cannot delegate the work to the language model.

Proofreading each iteration of the story and edits

Let’s say we already have the foundation and we’re ready to delegate the rest to AI. In this case, we run into another problem: even when the AI is given very detailed context, the GD has to read each version of the story and make corrections. Proofreading each iteration takes time, and on top of that we must add time for analysis and edits.

Edits, edits and more edits

In fact, GDs spend all their time on edits when working on a story. Even with a very detailed context, an AI can come up with something unsuitable for a particular pilot.

For example, we tried using AI to write a test story about an ordinary pilot who is afraid to get into a robot. The AI came up with a hand injury and an inspiring speech at the end, and also added a lot of unnecessary details. As a result, the GD had to spend a lot of time removing them and correcting the result.

Time costs

At this point, it all comes down to time: editing a story generated by AI takes longer than editing a story written by a GD.

As a result, it’s not yet possible to optimize the process with AI. But things might work out in the future if the neural network is trained to navigate the game’s story and context. At a minimum, AI could be used to generate a basic story, which a GD could then develop. For now, though, the classic process produces better-quality work and takes less time.

How we now use text AI when writing biographies of pilots

AI is convenient when searching for ideas, the right wordings, and narrative structures. That said, AI is still not able to generate the desired text in the snap of a finger.

This might be possible if a person does most of the work: a GD makes a list of details that should be mentioned in the text, defines the intended style, and so on. However, writing texts on our project isn’t bound by strict rules. There is room for creativity, and a lot is decided at the GD level; most of these decisions are made during the writing process. So, AI is now more like an assistant that can help you, through a huge number of iterations, arrive at something that only vaguely resembles the final result.

The robots in War Robots

The robots themselves comprise the main content in the game War Robots. They are the basis on which all builds are made: a robot has its own abilities, which the player then complements with their gun and pilot skills.

Work on a robot usually goes like this:

Researching a mechanic to understand what the game may be missing, something that will help add variety to the meta or somehow balance it

The concept of the robot, its abilities, class and distinctive features

A GDD of the robot: deeper work on all mechanics, their interaction with other content, more emphasis on visuals, selection of references for concept artists. You can read more about how we write GDD here.

Pre-production

Release

Operation and support

When bringing AI into the robot creation process, we first had to determine where exactly the new technologies could be applied. We immediately excluded the later stages, since pre-production and content operation still require people, and it’s not yet possible to optimize that work using AI at all.

The research and concept stage

Usually, GDs start researching the game and try to embed a new robot in a way that will be useful for the current meta and that will appeal to the players. All thoughts and ideas are recorded in a separate document with visual examples of what the new mech would look like.

In theory, at this stage AI could help:

Generate ideas for new robot abilities

Generate ideas for robot’s appearance

Completely valid considerations. But it all comes down to the time it takes to create a concept. This is roughly the same problem as with writing a pilot’s story: to get a decent result, you need to give the AI a lot of context, which is difficult and takes a ton of time. And at this stage, losing time is critical, since work is already underway and any delays have a pronounced effect on the subsequent flow.

Moreover, GDs would have to train the AI on the context anew each time, or add new info to an existing knowledge base. So, AI isn’t very useful at this stage; the old work formats are much more effective. Finding an approximate visual reference on Pinterest, drawing ideas from old robot abilities, and adding some elements from other games we play is still much faster than generating it with AI.

The GDD generation stage

At this stage, the GD begins work on documentation and development of the main gameplay part. This usually takes a lot of time: you need to describe in great detail how the content will be technically implemented in the game.

Here, AI can play the role of Google and help, for example, generate titles or names. This method has already proven effective. For example, when coming up with a name for a robot, you can ask AI for 10 names of gods or of some specific technique, and quickly get a lot of naming options. Otherwise, a GDD is written according to a template, and the other parts can’t be optimized, except for the art. But that happens in the art department, not in game design.
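Replies to a “give me 10 names” request usually come back as a numbered list mixed with some chatty text. A small helper like the one below (our own hypothetical sketch, not part of any AI tool’s API) can pull out the items and drop names that are already taken, so the GD only reviews fresh options. It assumes the reply uses lines like `1. Name`, `2) Name`, or `3 - Name`; other formats would need a different pattern, and the names in the example are placeholders.

```python
import re

# Matches numbered lines such as "1. Ares", "2) Odin" or "3 - Thor".
_ITEM = re.compile(r"^\s*\d+\s*[.):\-]\s*(.+?)\s*$")


def parse_name_ideas(reply: str, taken: set[str]) -> list[str]:
    """Extract numbered items from a model reply, skipping taken names and repeats."""
    taken_lower = {t.lower() for t in taken}
    seen: set[str] = set()
    names: list[str] = []
    for line in reply.splitlines():
        match = _ITEM.match(line)
        if not match:
            continue  # skip chatty lines like "Here are some ideas:"
        name = match.group(1).strip(" *\"'")  # remove markdown bold and quotes
        key = name.lower()
        if not name or key in taken_lower or key in seen:
            continue
        seen.add(key)
        names.append(name)
    return names


reply = "Here are some ideas:\n1. Ares\n2) **Odin**\n3 - Thor\n4. ares\nHope this helps!"
print(parse_name_ideas(reply, taken={"Thor"}))  # ['Ares', 'Odin']
```

Comparing names case-insensitively catches repeats like “Ares” and “ares”, which models tend to produce when asked for long lists.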

When we fully describe a robot, the art department begins work on the model. Here GDs and the art department interact very closely and decide what style and design will suit the new robot. And you can easily use AI in this process.

Since War Robots has a class system, the art department already has some ideas about what a particular robot should look like. If we make a tank, then it is large and massive. The saboteur is light and fast, and so on.

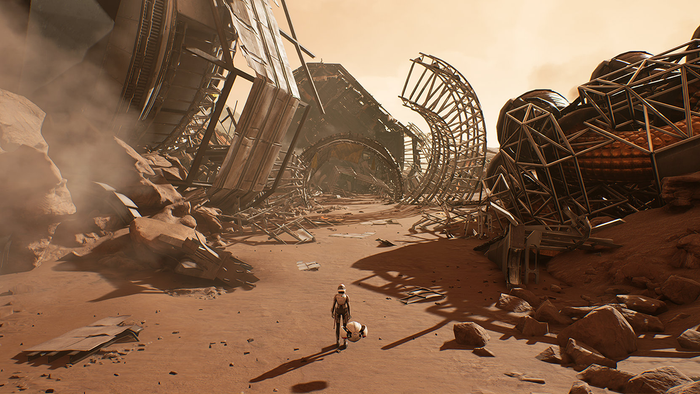

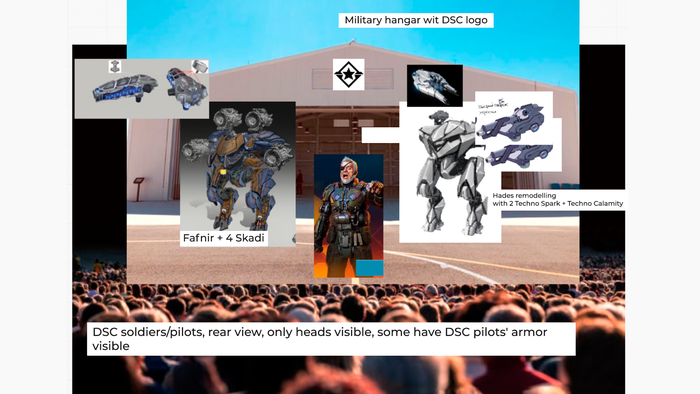

Below is what the GDD section with references for art might look like; let's take the Titan Rook as an example.

Refs for Rook’s visuals and shield placement

Working with art and AI

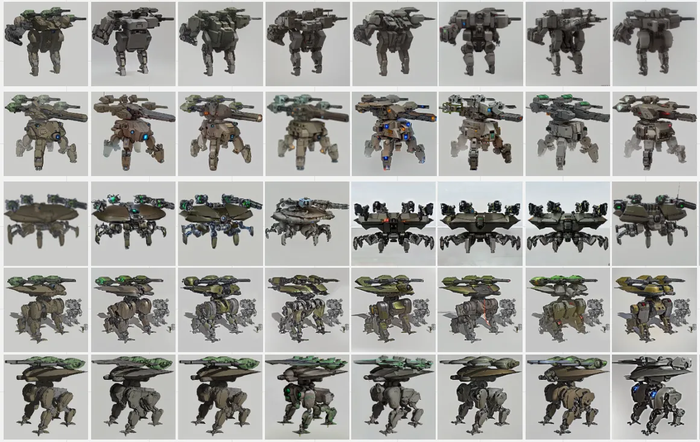

Our art flow is iterative. First, the concept is drawn → then it’s shown to the game design department → edits → then it’s shown to the game design department again. The initial concept is always drawn based on the GDD and the concept document, and this is where AI comes into play.

With the help of AI, and with the concept document in hand, the art department can immediately put forward many options for evaluation. In this case, a GD only needs to “like” individual elements of the robot or entire images. After this comes a sanity check of the result and subsequent edits. This is done without AI, by the art department.

Here’s what it looks like:

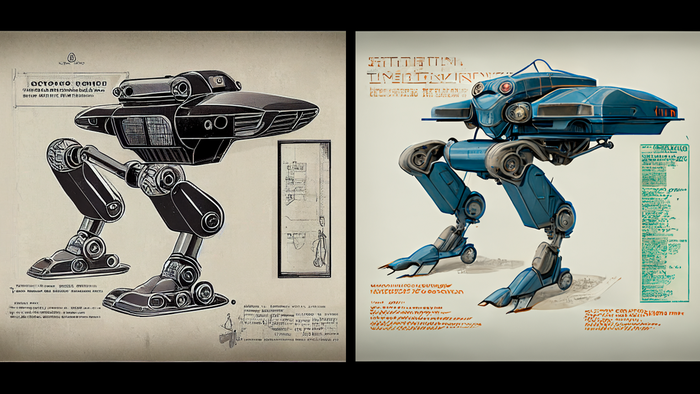

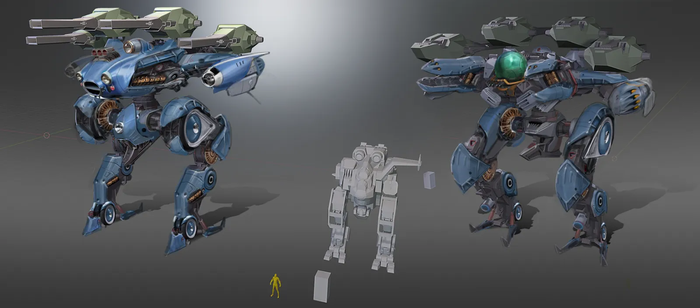

Sometimes, although rarely, a GD manages to find a clear reference for a robot’s appearance using AI even at an early stage. For example, there was a task to remodel an existing robot in a retro style, and we generated this reference for the art team.

This is what we got when we made the actual in-game remodel based on those refs:

How we use AI when creating robots

Text-based AI can already help with quick Googling and with generating a large number of options based on given criteria. But deeper work requires immersing the AI in context, which is time-consuming and labor-intensive.

Visual AI is used to search for ideas at the initial stages. With it, a GD can add more design ideas to the concept document, and at the concept stage, the art department can offer a large number of options at once. This helps the team find the right design.

Events and splashes

In our game, splashes are pictures that are updated with every event and show new content. Each release gets two new splashes that replace the old ones.

The splash flow looked like this:

We determine the style of the event and the type of content that will be released

GD figures out how to combine content and style into two pictures (and perhaps add some kind of narrative to them)

An art task is created, after which the art department draws splashes

This flow looks very simple, and, from the GD’s point of view, AI could be used effectively at every stage. But, as usual, there are some nuances worth considering here.

Event style

As with writing a GDD for a robot, here you can ask AI for ideas and form some styles and narratives based on them. AI copes well with requests like “give me 10 ideas...”, and a GD can then process the results.

It’s important to note that, in this case, you shouldn’t rely completely on AI, and each result should be checked. At this stage, text-based AI can be used as a quick Google substitute that finds and structures many search options at once. Beyond that, writing and thinking through an event comes down to the GDD and is difficult to optimize.

Idea for images

When the style and content of the event are already known, we start working on ideas for art. Normally, a GD goes to Pinterest or another resource where they can find visual references. Often that’s enough, but sometimes a GD needs to convey their idea as accurately as possible, and then we use additional tools like AI.

We convey the idea

Sometimes there is some kind of narrative tied to the picture. Or there are new elements in the picture that haven’t been used before, and the GD needs to convey the idea as clearly as possible but can’t find the necessary references on Pinterest. In this case, our department uses the following tools:

Collages. A GD must have minimal knowledge of graphic editors. A collage allows a GD to quickly combine in one picture all the elements that need to be conveyed in the final art.

Reference of a splash made in the form of a collage, and the final version

In-engine screenshots. A GD places all the robots in the engine the way they should be arranged in the art.

A reference made in the engine, and the final art

Visual AI. Using keywords, a GD entrusts the creation of the reference to AI and adjusts the result to the idea.

AI-generated references and the final art, drawn by artists based on those references

How we use AI for creating event splashes now

Text-based AI still does a good job of quickly generating references, which helps you decide on an idea much faster and start writing documentation; here, it easily replaces Google.

The three tools for conveying ideas for art generally take about the same amount of time. But sometimes it’s faster and more convenient to enter a couple of keywords into AI and see the result. These refs are used in documentation and when drawing up technical specifications for the artist.

Results

In terms of searching for visual references, we’re quite comfortable using both classical sources and AI. In the case of robots and splashes, AI references are fully used in documentation and when drawing up technical specifications for the artist. For pilots, AI references don’t always offer interesting details; here it’s more convenient to turn to “real” references. But if you need to quickly work out some basis for an image, AI can help save time.

However, it’s a little more complicated with texts. If you just need to quickly Google something and come up with a lot of options based on given criteria, it’s convenient to use AI. But, so far, it’s not been possible to fully optimize the story creation process: the AI simply doesn’t know how to navigate the story of the game and the general context. If this changes, AI could be used to generate a basic story, which a GD would then edit. But, for now, the classic way of working yields better results and takes less time.
